
The New Weakest Link: The Shift from Human Error to AI Agent Risk

For decades, cybersecurity professionals operated under one consistent assumption: humans are the weakest link. The intern who clicked the email from "IT Support" asking them to verify their password. The developer who pushed code at 2 a.m. to meet a deadline, committed AWS credentials to a public GitHub repository, and didn't notice until morning.

These moments of human fallibility, the fleeting lapses in judgment, and the simple desire to be helpful became the attack vectors that kept CISOs awake at night. And as a result, billions of dollars were funnelled into security awareness training, endpoint protection, identity management, threat detection systems, and entire security operations centers, all to build guardrails around our most unpredictable vulnerability: people.

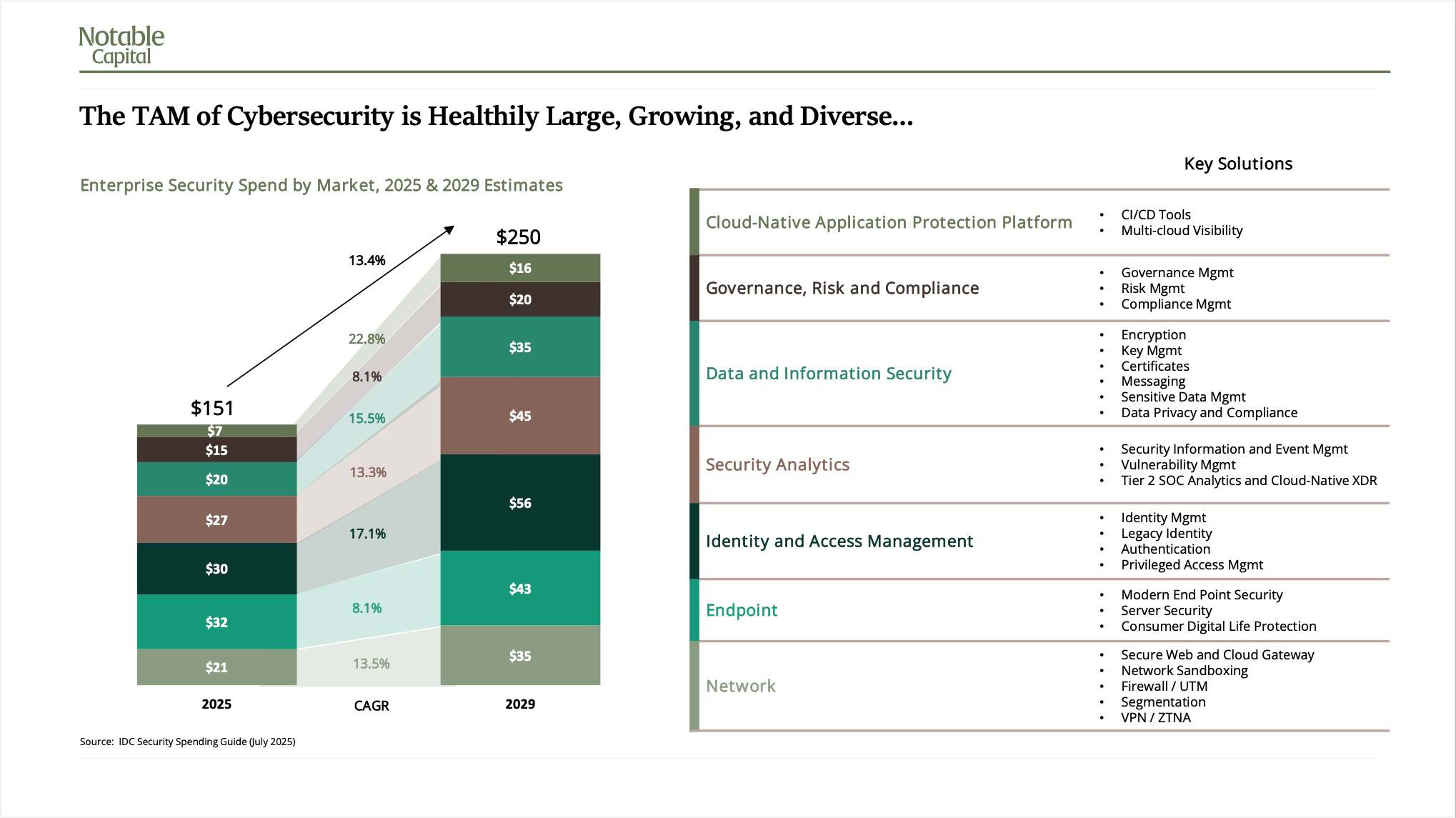

The identity management market is projected to reach $56 billion by 2029, according to data provided by Morgan Stanley. With an annual growth rate of 17% and a landscape of dozens of providers, it’s clear that teams are continuing to pour investment into keeping their companies secure. Yet what is notably absent from this crowded field is any dominant player focused on the new and evolving vulnerability that comes hand-in-hand with the productivity promised by AI agents. The market has evolved to secure human access patterns, but it's fundamentally unprepared for the explosion of autonomous identities.

The weakest link is no longer the employee who clicks a suspicious email. It's the AI agent they created last Tuesday to automate their workflow.

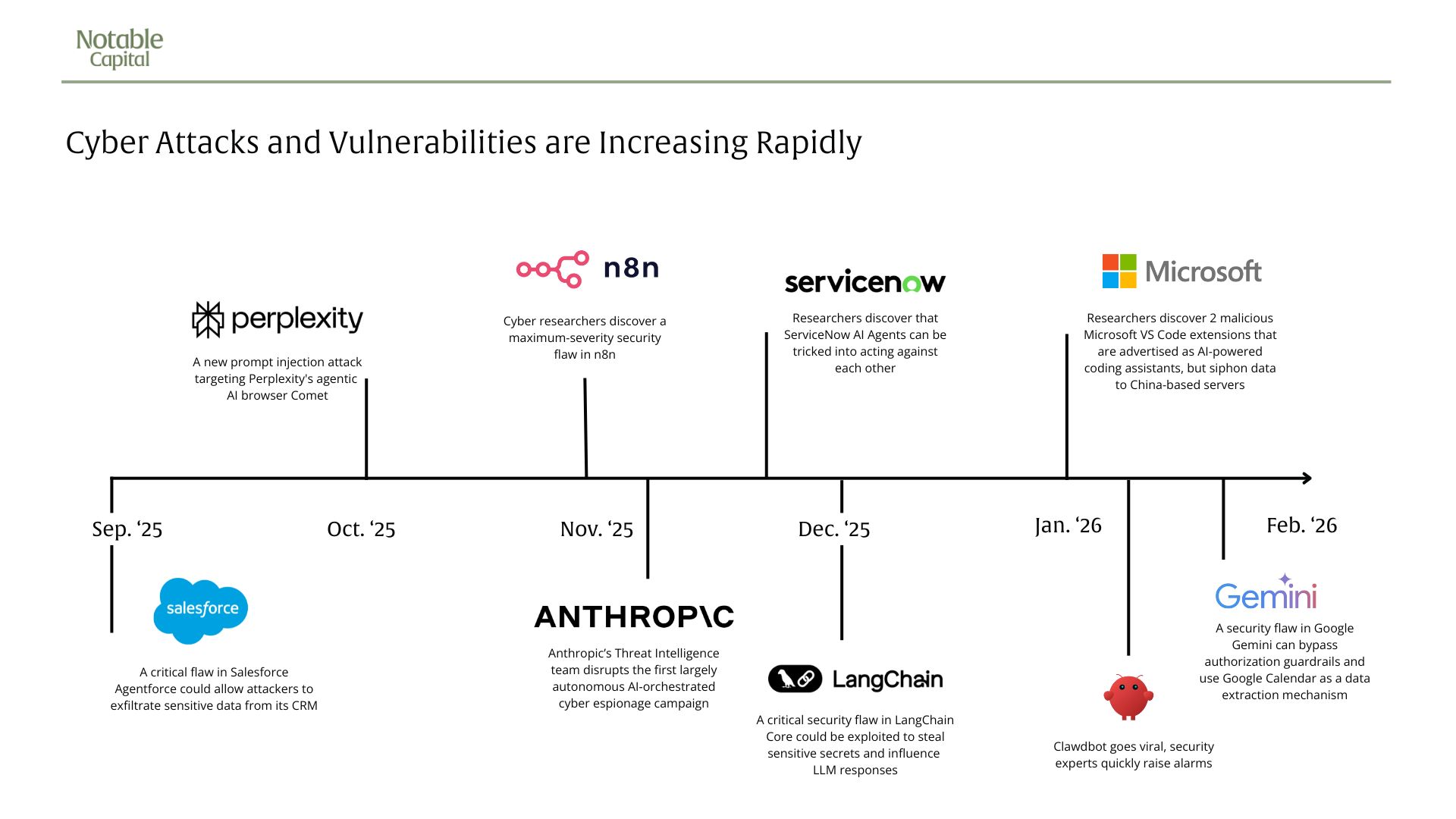

As organizations race to deploy agentic AI to drive efficiency, we’re in the midst of a fundamental shift in the security landscape. Agents now operate 24/7 with access to code repositories, HR systems, production databases, and sensitive customer data, making autonomous decisions with limited oversight and unclear ownership. And we’re already seeing real-world incidents that demonstrate the risks of agentic AI systems.

Last fall, Anthropic* disrupted what is believed to be the first largely autonomous AI-orchestrated cyber espionage campaign. The attack (which Anthropic’s Threat Intelligence team thwarted) demonstrated that powerful AI tools can be weaponized by relatively unsophisticated actors who don't need traditional coding or hacking skills: the AI agent carried out 80-90% of the operational tasks autonomously.

The campaign also showcased how even carefully designed guardrails can be circumvented by breaking malicious instructions into smaller and smaller components that individually pass safety checks but combine to create a sophisticated attack chain. It's a technique that exploits the very modularity that makes AI systems flexible and powerful.

As deployment accelerates and AI systems become more deeply embedded in critical business processes, the attack surface will expand exponentially, as will the incentives for attackers.

The Market Is Waking Up

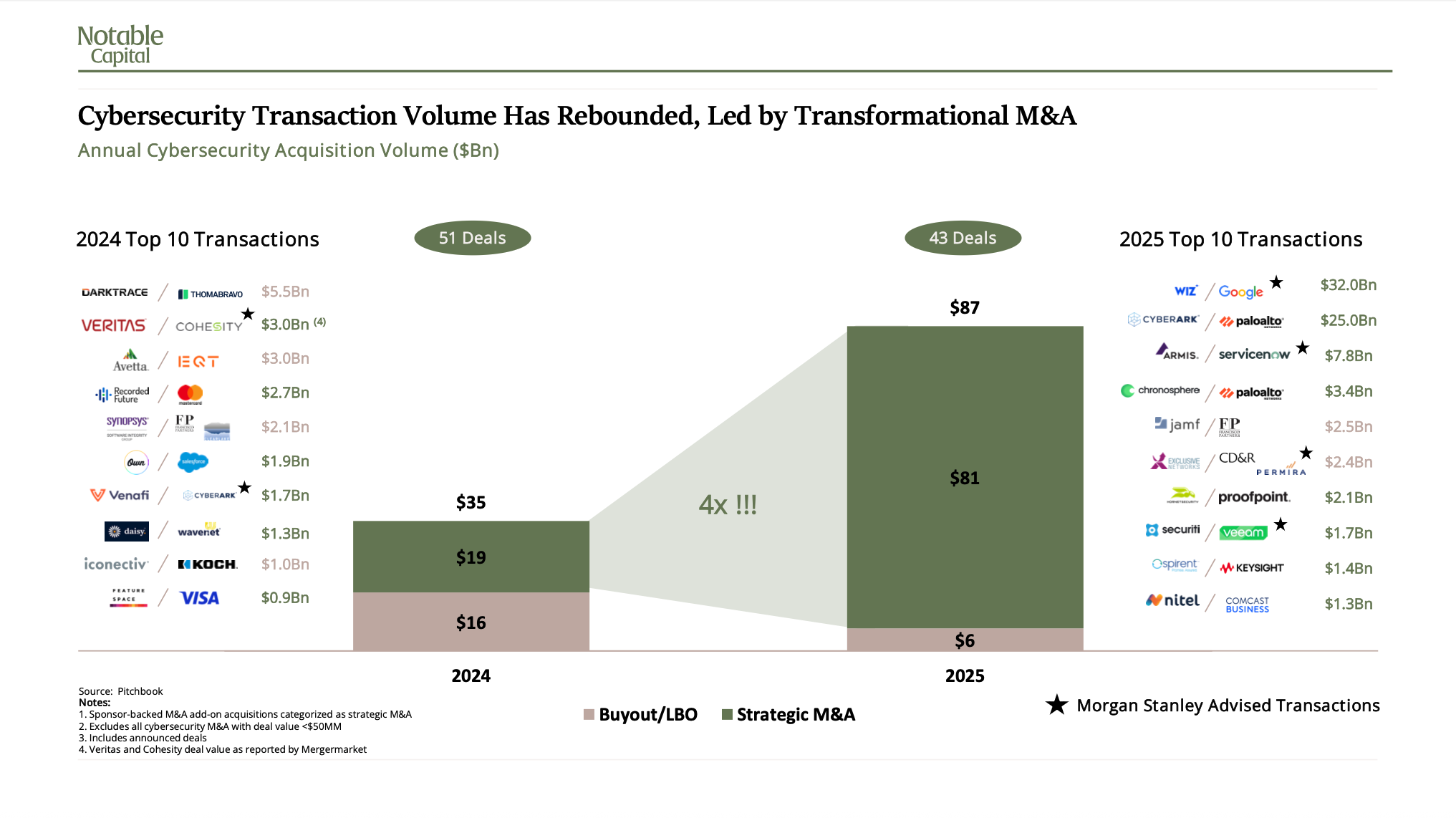

The urgency around AI security is driving unprecedented consolidation and investment in the cybersecurity market. Data from Morgan Stanley shows that in 2025, cybersecurity M&A activity exploded to ~$87 billion in total deal value, with a fourfold increase in strategic M&A from the prior year as major players positioned themselves for a fundamentally different security landscape.

Google's record-setting $32 billion pending acquisition of Wiz signals where the market is heading. Wiz built its business on cloud-native application protection, recognizing early that traditional security models couldn't adequately protect modern, distributed infrastructure. Palo Alto Networks' $25 billion acquisition of CyberArk and ServiceNow's $7.8 billion pending purchase of Armis follow similar logic: acquiring capabilities purpose-built for environments where the perimeter has dissolved, identities proliferate beyond traditional human users, and security now depends on continuously understanding who and what exists in the environment.

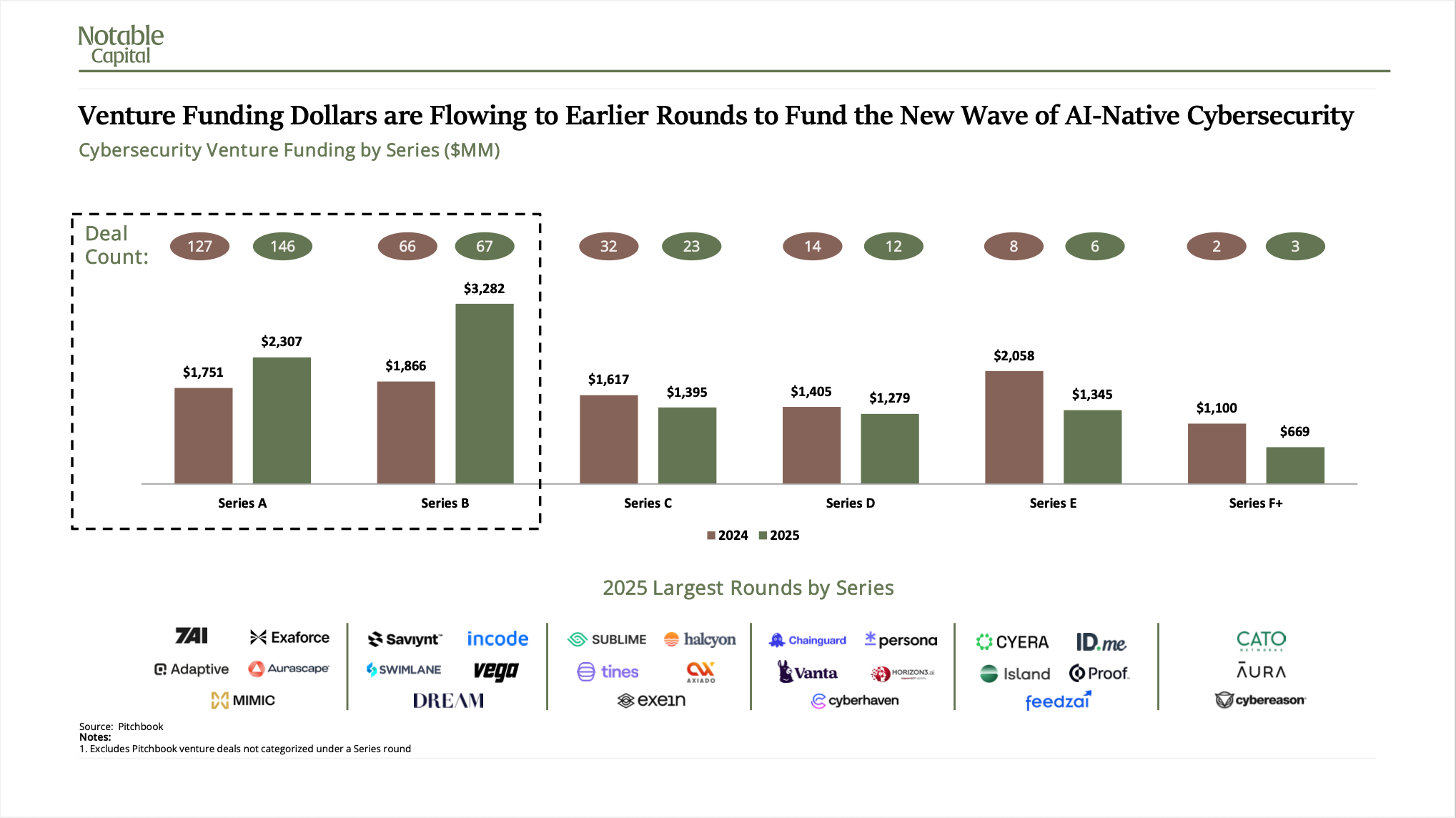

The venture market tells a complementary story. While total cybersecurity venture funding remained relatively flat year-over-year, the distribution shifted dramatically toward earlier-stage companies. Series A and B funding jumped from $3.6 billion to $5.6 billion, with capital flowing disproportionately to AI-native security startups addressing problems that didn't exist two years ago.

Read between the lines, and you’ll see the security industry recognizing that the tools and architectures built for the last two decades of threats are insufficient for what's coming next. The industry understands that retrofitting legacy platforms will not scale and that securing AI-native systems requires entirely new capabilities designed from the ground up.

Why This Moment Is Different

Every major technology shift, from mainframes to client-server, from desktop to mobile, from on-premises to cloud, introduced new security challenges. But the AI transformation is happening at unprecedented speed and scale, with three characteristics that make it uniquely fragile.

1. Accessibility and Decentralized Adoption: Employees Are Deploying AI Faster Than Security Can Track

AI adoption is fundamentally more decentralized than previous technology shifts. When organizations moved to the cloud, that migration was typically orchestrated by IT and security teams over months (or even years). But AI agents are being spun up by employees across every department, often in minutes, with no central visibility or control.

How can security teams possibly keep up when non-human identities now outnumber human users by 82 to 1?

Technical and non-technical employees alike can now create agents, workflows, and integrations without writing a single line of code or submitting a single IT ticket. Marketing creates an agent to analyze campaign performance. Sales builds one to automatically update CRM records. Finance inputs data into Claude to reconcile against the forecast. Each one represents a new identity, a new set of permissions, and a new potential attack vector.

Consider what’s happened recently with Clawdbot (now called Moltbot), an open-source AI assistant that went viral at launch, amassing over 60,000 GitHub stars in a matter of weeks. Moltbot integrates deeply with the user’s digital life (Slack, WhatsApp, Telegram, Discord, Microsoft Teams, and iMessage, among others), requiring broad access to read and respond to emails, manage schedules, access files, and execute commands. From a security standpoint, this access could lead to credential theft, data leakage, and even remote code execution over the internet.

In less than a week of analysis, Token Security* found that 22% of their customers have employees actively using Moltbot. That’s how quickly the tool spread through word-of-mouth and developer communities, leaving security teams scrambling to understand what was suddenly running inside their organizations.

Organizations that once could map and monitor every system, every user, every access path, now face an environment where visibility has collapsed.

2. AI Agents Inherit All Your Permissions (And All Your Risk)

The core promise of agentic AI is that these systems can operate with human-level judgment while moving at machine speed. To deliver on that promise, AI agents inherit the full permissions of the users who deploy them. If you have access to code repositories, HR systems, Dropbox, Salesforce, production environments, and sensitive API keys, so does your agent.

This builds both the promise of unprecedented productivity and significant risk into the same system. The same deep connectivity that makes these tools valuable (their ability to orchestrate across dozens of systems, to make decisions based on context from multiple sources, to act autonomously on your behalf) also exposes them to much greater risk than the decades of software that came before.
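One common mitigation for inherited permissions is least-privilege scoping: rather than handing an agent everything its creator can do, grant only the overlap between what the creator holds and what the task needs. The sketch below is illustrative only. The scope strings, the `AgentGrant` type, and the `scope_agent` helper are hypothetical names, not any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentGrant:
    """Hypothetical record of what a single agent is allowed to do."""
    agent_id: str
    owner: str
    scopes: frozenset


def scope_agent(agent_id, owner, owner_scopes, task_scopes):
    """Grant only scopes the task needs AND the owner already holds."""
    granted = frozenset(set(owner_scopes) & set(task_scopes))
    return AgentGrant(agent_id=agent_id, owner=owner, scopes=granted)


# Assumed example: a sales employee with broad access builds a CRM agent.
owner_scopes = {"crm:read", "crm:write", "repo:write", "hr:read", "prod-db:admin"}
task_scopes = {"crm:read", "crm:write"}

grant = scope_agent("crm-updater", "sales-user", owner_scopes, task_scopes)
print(sorted(grant.scopes))
```

Under this model, compromising the CRM agent would not expose the repository, HR, or production database access its creator happens to hold.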

As an example, ServiceNow's Now Assist AI agents were found to be susceptible to prompt injection attacks, where malicious instructions embedded in knowledge base articles could cause agents to follow harmful commands when processing legitimate user queries. Attackers could potentially exfiltrate confidential information, modify records, or execute unauthorized actions, all by manipulating the data sources the agents were designed to trust.

As security researchers noted, "This discovery is alarming because it isn't a bug in the AI; it's expected behavior as defined by certain default configuration options."
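One way to reason about this class of problem is to treat anything an agent retrieves (knowledge base articles, emails, web pages) as untrusted data, and to require human approval for state-changing actions proposed while that data was in context. The following is a minimal sketch under that assumption. The action names and the `derived_from_untrusted` flag are hypothetical, and real taint tracking is considerably harder than a single boolean.

```python
from dataclasses import dataclass

# Assumed set of actions that cannot modify or exfiltrate anything.
READ_ONLY_ACTIONS = {"search_kb", "read_record"}


@dataclass
class ProposedAction:
    name: str
    # True if the agent proposed this action while untrusted content
    # (e.g. a retrieved article) was part of its context.
    derived_from_untrusted: bool


def decide(action):
    """Return a policy decision for an action the agent wants to take."""
    if action.name in READ_ONLY_ACTIONS:
        return "allow"
    if action.derived_from_untrusted:
        return "needs_human_approval"
    return "allow"


print(decide(ProposedAction("read_record", derived_from_untrusted=True)))
print(decide(ProposedAction("modify_record", derived_from_untrusted=True)))
```

The point of the design is that an instruction hidden in a trusted-looking data source can still be read, but it cannot by itself cause a record to be modified or sent elsewhere.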

And recently, cybersecurity researchers also disclosed a number of security flaws in n8n, a popular AI workflow automation platform. The most severe flaw allows an unauthenticated remote attacker to gain complete control over susceptible instances without any credentials. As security researchers warned: "A compromised n8n instance doesn't just mean losing one system—it means handing attackers the keys to everything. API credentials, OAuth tokens, database connections, cloud storage—all centralized in one place. n8n becomes a single point of failure and a goldmine for threat actors."

A compromised agent isn't just a compromised laptop or a phished employee. It's a compromised identity with broad access, autonomous decision-making capability, and the speed to cause catastrophic damage before anyone notices.

Agents Are Triggering Chain Reactions (And Cascading Failures) Across Systems

Agents often operate in chains, with upstream agents passing requests and context to downstream agents. But what happens when upstream agents don't provide enough context? Downstream agents may make assumptions to fill the gaps, potentially making dangerous decisions or over-provisioning access to complete their tasks.

An agent designed to help employees find information might learn that it can be more helpful by accessing systems it wasn't explicitly granted permission to. An agent managing cloud resources might decide that terminating certain instances will optimize costs, not realizing that those instances are running critical production workloads. As one example, Replit experienced agents deleting production databases, not through malicious intent or software bugs, but through autonomous decisions made by agents trying to optimize or clean up resources.

These incidents share a common characteristic: the AI systems involved often behaved as designed, just not as intended. The gap between design and intention, between capability and control, is where the danger lies.
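One way to narrow the gap between design and intention is a guard that sits between the agent and the systems it acts on, blocking destructive operations against production resources unless a human has approved them. The sketch below assumes resources carry an `env` tag and that the set of destructive actions is known in advance. Both are illustrative assumptions, as are all of the names.

```python
from dataclasses import dataclass, field

# Assumed list of operations that cannot be undone.
DESTRUCTIVE_ACTIONS = {"terminate_instance", "drop_database", "delete_bucket"}


@dataclass
class Resource:
    name: str
    tags: dict = field(default_factory=dict)


def authorize(action, resource, human_approved=False):
    """Allow routine actions; gate destructive ones on production resources."""
    if action not in DESTRUCTIVE_ACTIONS:
        return True
    # Fail closed: an untagged resource is treated as production.
    if resource.tags.get("env", "production") == "production":
        return human_approved
    return True


prod_db = Resource("orders-db", {"env": "production"})
print(authorize("drop_database", prod_db))
print(authorize("drop_database", prod_db, human_approved=True))
```

An agent trying to "clean up" resources would still be free to act in development environments, but a production database could not be dropped on the agent's judgment alone.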

3. The Identity Explosion No One Can See

Traditional identity and access management (IAM) was built around human identities. Organizations could maintain directories of employees, contractors, and partners. They could implement joiner-mover-leaver processes and enforce policies about access reviews and recertification. The model wasn't perfect, but it was manageable.

AI shattered that model. Organizations now face a surge in non-human identities that they cannot inventory, assign accountability for, or monitor. Every agent, every automation, every workflow represents a new identity, but there's no standard lifecycle management for these identities.

When employees leave or change roles, their agents often persist with full access intact. Who owns the agent that Sarah created before she left for another company? Who's responsible for reviewing what it has access to? Who even knows it exists? And what systems would stop working if it’s suspended?

Traditional tools were not built for this new landscape, leaving organizations flying blind in an environment where visibility is more critical than ever.
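The ownership questions above become tractable once agents are recorded in an inventory with an accountable owner. As a rough sketch, assuming such an inventory exists and can be compared against the current employee directory, orphaned agents can be flagged like this. The `AgentIdentity` fields and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AgentIdentity:
    """Hypothetical inventory entry for one non-human identity."""
    agent_id: str
    owner: str
    scopes: list
    last_reviewed: date


def find_orphans(agents, active_employees):
    """Return agents whose owner is no longer in the employee directory."""
    return [a for a in agents if a.owner not in active_employees]


agents = [
    AgentIdentity("report-bot", "sarah", ["crm:read", "drive:read"], date(2025, 3, 1)),
    AgentIdentity("ticket-triage", "priya", ["helpdesk:write"], date(2025, 9, 15)),
]
active_employees = {"priya"}  # Sarah has left the company

orphans = find_orphans(agents, active_employees)
print([a.agent_id for a in orphans])
```

A check like this would typically run as part of the leaver process, so an agent is reassigned or suspended deliberately rather than discovered after an incident.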

The Path Forward: 2026 Will Be the Year of Agentic Security

The AI attack surface is expanding faster than most security teams can presently track, the blast radius of potential incidents is growing, and the complexity of these systems exceeds our current ability to secure them effectively. But, as with all security vulnerabilities, there is an opportunity for the market and security leaders to respond.

2026 will be the year agentic security goes mainstream.

Historically, there’s at least a six-month lag between people adopting new technology and the relevant security guardrails being developed to protect against that technology. So, while the adoption of agentic solutions continues to accelerate, we expect to see a new wave of security products emerge and become a priority for security leaders looking to defend their organizations.

Venture funding has already shifted dramatically toward earlier-stage companies building AI-native security solutions, with Series A and B investments jumping from $3.6 billion to $5.6 billion. Capital is flowing to seed-stage startups building solutions for visibility into autonomous activity, identity management, runtime protection, continuous red teaming to simulate real-world attacks, governance, and MCP protection—solutions to problems that didn't exist two years ago but are now increasingly integrating into enterprise security.

One thing is clear: agents are coming. 2026 will be the year we see whether enterprises can move fast enough to protect themselves in this new paradigm. Organizations that recognize this shift and treat agentic security as foundational infrastructure today will define the operating model of secure AI adoption, while those that wait risk a catastrophic incident that forces their hand.

This article is a preview of the 2026 Rising in Cyber Data Report, published by Notable Capital in collaboration with Morgan Stanley. Stay tuned for the full report later this year.

* indicates a Notable Capital portfolio company.

--

Legal Disclaimer

We have prepared the information contained on this website solely for informational purposes. You should not definitively rely upon it or use it to form the definitive basis for any decision, contract, commitment or action whatsoever, with respect to any proposed transaction or otherwise.

We have prepared the information contained on this website based, in part, on certain assumptions and information obtained by us from various sources. Our use of such assumptions and information does not imply that we have independently verified or necessarily agree with any of such assumptions or information, and we have assumed and relied upon the accuracy and completeness of such assumptions and information for purposes of preparing the information contained on this website. Neither we nor any of our affiliates, or our or their respective officers, employees or agents, make any representation or warranty, express or implied, in relation to the accuracy or completeness of the information contained on this website or any oral information provided in connection herewith, or any data it generates and accept no responsibility, obligation or liability (whether direct or indirect, in contract, tort or otherwise) in relation to any of such information. We and our affiliates and our and their respective officers, employees and agents expressly disclaim any and all liability which may be based on the information on this website and any errors therein or omissions therefrom. Neither we nor any of our affiliates, or our or their respective officers, employees or agents, make any representation or warranty, express or implied, that any transaction has been or may be effected on the terms or in the manner stated in on this website, or as to the achievement or reasonableness of future projections, management targets, estimates, prospects or returns, if any. Any views or terms contained on this website are preliminary only, and are based on financial, economic, market and other conditions prevailing as of the applicable date(s) such information is presented and/or as of the date such information is first presented and are therefore subject to change. We undertake no obligation or responsibility to update any of the information contained on this website. 
Past performance does not guarantee or predict future performance.

This website and the information contained on this website do not constitute legal, regulatory, accounting or tax advice. We recommend that you seek independent third party legal, regulatory, accounting and tax advice regarding the information contained on this website. This website and the information contained on this website do not constitute and should not be considered as any form of financial opinion or recommendation by us or any of our affiliates. This website is not a research report.

This website and the information contained on this website is provided by Notable Capital Management, L.L.C. and/or certain of its affiliates or other applicable entities in collaboration with other third parties.
